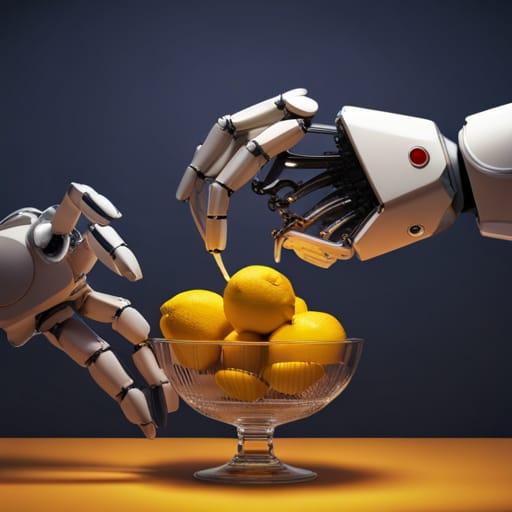

Google DeepMind has introduced RT-2, a new vision-language-action (VLA) model for robotics. Trained on web-based text and images, RT-2 can directly output robotic actions, essentially “speaking robot”.

Key Points:

- RT-2 is a vision-language-action model trained on web-based text and images, capable of directly outputting robotic actions.

- Unlike chatbots, robots need “grounding” in the real world and in their own abilities. They must recognize and understand objects in context and, most importantly, know how to interact with them.

- RT-2 allows a single model to perform the kind of complex reasoning seen in foundation models and also to output robotic actions. It demonstrates that, with a small amount of robot training data, the system can transfer concepts embedded in its language and vision training data to direct robot actions, even for tasks it hasn’t been trained to do.

- RT-2 shows tremendous promise for more general-purpose robots. A significant amount of work remains before helpful robots can operate in human-centered environments, but RT-2 suggests that an exciting future for robotics is within reach.
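To make the idea of a model “directly outputting robotic actions” more concrete, here is a minimal Python sketch of one way a text-like model output could be turned into a robot command. It assumes the model emits a short string of integers, each of which is a discretized bin for one action dimension. The eight-field layout, the 256-bin resolution, the value ranges, and the names `ArmAction`, `bin_to_value`, and `decode_action` are all illustrative assumptions, not details taken from this summary or from DeepMind's code.

```python
from dataclasses import dataclass

NUM_BINS = 256  # assumed number of discretization bins per action dimension


@dataclass
class ArmAction:
    """Hypothetical continuous command for a robot arm."""
    terminate: bool
    delta_position: tuple  # (dx, dy, dz), hypothetical units: metres
    delta_rotation: tuple  # (droll, dpitch, dyaw), hypothetical units: radians
    gripper: float         # 0.0 = fully open, 1.0 = fully closed (assumed)


def bin_to_value(bin_index: int, low: float, high: float) -> float:
    """Map an integer bin in [0, NUM_BINS - 1] linearly onto [low, high]."""
    if not 0 <= bin_index < NUM_BINS:
        raise ValueError(f"bin index {bin_index} outside 0..{NUM_BINS - 1}")
    return low + (high - low) * bin_index / (NUM_BINS - 1)


def decode_action(text: str) -> ArmAction:
    """Parse a space-separated string of 8 integers into an ArmAction.

    Assumed layout: terminate flag, 3 position bins, 3 rotation bins,
    1 gripper bin. The ranges below are hypothetical placeholders.
    """
    tokens = [int(t) for t in text.split()]
    if len(tokens) != 8:
        raise ValueError(f"expected 8 integers, got {len(tokens)}")
    terminate = tokens[0] == 1
    position = tuple(bin_to_value(t, -0.1, 0.1) for t in tokens[1:4])
    rotation = tuple(bin_to_value(t, -0.5, 0.5) for t in tokens[4:7])
    gripper = bin_to_value(tokens[7], 0.0, 1.0)
    return ArmAction(terminate, position, rotation, gripper)


if __name__ == "__main__":
    # A made-up output string, used only to exercise the decoder.
    print(decode_action("0 0 255 0 0 255 0 255"))
```

The point of a design like this is that the robot command travels through the same channel as ordinary text, so one model can handle both language and actions without a separate action head.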

For more information, visit the full article on the Google DeepMind Blog.